Vast Majority of Drug Ads in Leading Medical Journals Don’t Pass MDs’ Sniff Test! Over Half Failed to Quantify Serious Risks, Including Death

A study led by Mount Sinai School of Medicine researchers examined 192 pharmaceutical advertisements (83 unique full advertisements) in biomedical journals and found that only 18 percent (15 of the 83 unique ads) were compliant with Food and Drug Administration (FDA) guidelines, and that over half failed to quantify serious risks, including death. The study was published online on August 17, 2011 in the journal Public Library of Science (PLoS) One (here; also see press release here).

I’ve had some communication about this study with Deborah Korenstein, MD, Associate Professor of Medicine at Mount Sinai School of Medicine and lead author of the study, and with Joseph S. Ross, MD, Section of General Internal Medicine, Yale University School of Medicine, a co-author of the study.

The major question I had was which “FDA guidelines” they used to measure compliance. The article was very non-specific in that regard, but I was sent the “Master Guide for Ad review,” which was used to assess compliance with 20 FDA “Guidelines” (find the Guide attached to this post).

Actually, “guidelines” is the wrong term to use, because what the authors used to create their assessment “Master Guide” was the original FDA “Regulations” (i.e., Code of Federal Regulations Title 21, Sec. 202.1 Prescription-drug advertisements; find it here).

For example, one of the assessment items asks reviewers: “Are there unsupportable efficacy claims related to comparisons with other drugs?” In support of that, the authors cite this language in the Code: “Contains a drug comparison that represents or suggests that a drug is safer or more effective than another drug in some particular when it has not been demonstrated to be safer or more effective in such particular by substantial evidence or substantial clinical experience.” This is just one of the reasons that would make an ad “false, lacking in fair balance, or otherwise misleading” according to the regulations.

So, in short, the authors played the role of reviewers at the FDA’s Division of Drug Marketing, Advertising, and Communications (DDMAC) and, based on THEIR interpretation of the regulations, determined whether the ad under review was non-compliant with FDA “guidelines.”

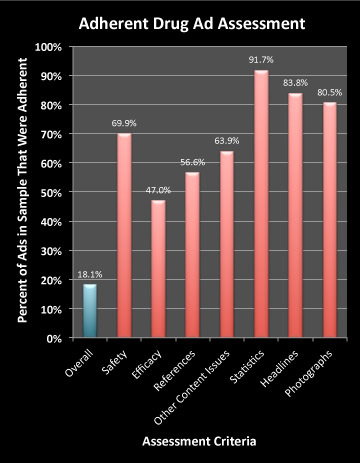

I took the liberty of plotting some of their results. Looking at the glass half-full, the following chart shows Percentage of Ads that are completely ADHERENT with FDA “guidelines” according to this study:

When assessing content, the reviewers looked at issues relating to “Safety,” “Efficacy,” and “References,” as well as other content issues.

As can be seen in this chart, only 47% of the ads (N=83) were deemed adherent to FDA “guidelines” regarding efficacy. The questions the authors asked themselves when reviewing the efficacy claims in ads were:

- Does the ad quantify benefits?

- Were appropriate efficacy data or numbers presented?

- Are there unsupportable efficacy claims related to comparisons with other drugs?

- Are efficacy claims based on non-clinical data?

- Are there unapproved efficacy claims?

- Does the ad make unfounded claims regarding competitor drugs or does it claim inaccurately that advertised drug differs from other drugs?

For each question, the reviewer could check off “Yes,” “No,” “Not Sure,” or “NA.” Reviewers used language from the FDA regulations cited above to subjectively decide the answer. For example, in support of question 4, the assessment guide quotes this language from the FDA regulations: “Contains … data or conclusions from non-clinical studies … which suggests they have clinical significance when in fact no such … significance has been demonstrated.” Ads that fit that description, as subjectively determined by the reviewer, were judged “Non-Adherent.”

According to the researchers:

“Advertisements were considered adherent if they contained none of the 21 [actually 20 according to their “Master Guide”] features used by FDA to classify advertisements as misleading, non-adherent if they contained one or more of the features used by FDA to classify advertisements as misleading, and possibly non-adherent if there were no features clearly defining a misleading ad but at least 1 of those items for which information was incomplete.”
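The three-way rule in that quote can be sketched in a few lines of Python. This is only my illustration of the rule as quoted, not the authors' actual procedure: the `Finding` values and the `classify_ad` function are hypothetical names, and I am assuming each Master Guide item has already been reduced to "misleading feature present," "absent," or "incomplete information" (the raw “Yes”/“No” answers cannot be used directly, because for some questions “Yes” is the good answer and for others it is the bad one).

```python
from enum import Enum


class Finding(Enum):
    """Hypothetical per-item outcome after a reviewer answers a Master Guide question."""
    ABSENT = "misleading feature absent"
    PRESENT = "misleading feature present"
    INCOMPLETE = "information incomplete / not sure"


def classify_ad(findings):
    """Apply the quoted rule to one ad's list of per-item findings.

    - any misleading feature present     -> "non-adherent"
    - none present, but some incomplete  -> "possibly non-adherent"
    - otherwise                          -> "adherent"
    """
    if any(f is Finding.PRESENT for f in findings):
        return "non-adherent"
    if any(f is Finding.INCOMPLETE for f in findings):
        return "possibly non-adherent"
    return "adherent"


# Example: an ad with 20 items, one of which the reviewer could not judge.
example = [Finding.ABSENT] * 19 + [Finding.INCOMPLETE]
print(classify_ad(example))
```

Note that a single "present" finding outweighs any number of "incomplete" ones, which is why the order of the two checks matters.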

Sometimes, the reviewer was “not sure” if the ad was adherent or not. In the case of efficacy claims, 31.3% of the ads fell into this category, while 21.7% were judged “non-adherent.” The remainder — 47% — were “adherent” as shown in the chart above.

Well, if the MD reviewer is “Not Sure,” an actual FDA reviewer might not be sure either. But in that case, the ad would be put into the “adherent” bin. In other words, an FDA reviewer might consider that 78.3% (47% + 31.3%) of the ads were actually adherent with regard to efficacy claims.
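For anyone who wants to check the arithmetic, here is a minimal sketch using only the three efficacy percentages quoted above. The variable names are mine, and the "benefit of the doubt" reading (counting every “Not Sure” ad as adherent) is my assumption about how an FDA reviewer might treat them, not something the study reports.

```python
# Efficacy results for the 83 unique ads, as percentages quoted in this post.
efficacy_pct = {
    "adherent": 47.0,
    "not sure": 31.3,
    "non-adherent": 21.7,
}

# Sanity check: the three bins should account for all of the ads.
total = round(sum(efficacy_pct.values()), 1)

# Assumption: "not sure" ads get the benefit of the doubt and count as adherent.
benefit_of_doubt = round(efficacy_pct["adherent"] + efficacy_pct["not sure"], 1)

print(f"Bins sum to {total}%")
print(f"Adherent if 'not sure' counts as adherent: {benefit_of_doubt}%")
```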

The problem, note the authors, is that the FDA is NOT catching the ads that, according to them, are obviously non-adherent. Why not? “The limited resources of the FDA’s Division of Drug Marketing and Advertising [DDMAC] are a major barrier to successful regulation of the pharmaceutical industry’s multi-billion dollar marketing budget,” said Dr. Korenstein, who notes in the research paper that “DDMAC’s fiscal year 2008 budget of $9 million is dwarfed by the pharmaceutical industry’s $58 billion marketing budget.”

“We are hopeful that an update in FDA regulations, with increased emphasis on the transparent presentation of basic safety and efficacy information, might improve the quality of information provided in physician-directed pharmaceutical advertisements,” said Dr. Korenstein.

[This article originally appeared in Pharma Marketing Blog. Make sure you are reading the source to get the latest comments.]

PMN1013-05

Issue: Vol. 10, No. 13

Publication date: 23 August 2011
